Early Wednesday morning Beijing time, Amazon Web Services (AWS), the world's "number one" cloud computing provider, unveiled Trainium 3, its next-generation in-house AI chip, at its re:Invent conference. Wall Street had been eagerly awaiting the chip, which is now officially launched.

According to the company, Trainium 3 is Amazon's first chip built on a 3nm process. Compared with the previous-generation Trainium chip, it offers up to 4.4 times the computing performance, 4 times the energy efficiency, and nearly 4 times the memory bandwidth, positioning it to compete on cost-effective AI computing power. Each Trainium 3 UltraServer system holds 144 chips, and UltraServers can be interconnected so that a single application can draw on up to 1 million Trainium 3 chips, 10 times the previous generation's scale.
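To put the scale-out figures in perspective, the short calculation below works out how many 144-chip UltraServers a 1-million-chip deployment implies, and how much high-bandwidth memory one UltraServer carries using the 144GB-per-chip figure the company disclosed. It is only a back-of-the-envelope sketch under stated assumptions: every UltraServer is fully populated, and networking, redundancy and spare capacity are ignored. The function names are illustrative, not part of any AWS tool.

```python
# Back-of-the-envelope sizing from the figures AWS quoted for Trainium 3.
# Assumption: UltraServers are fully populated; networking and spares are ignored.
CHIPS_PER_ULTRASERVER = 144            # chips per UltraServer, as announced
MAX_CHIPS_PER_APPLICATION = 1_000_000  # "up to" scale-out figure, as announced
HBM_PER_CHIP_GB = 144                  # per-chip HBM capacity, as announced


def ultraservers_needed(chips: int, per_server: int = CHIPS_PER_ULTRASERVER) -> int:
    """Minimum number of UltraServers that can hold `chips` chips (integer ceiling division)."""
    return -(-chips // per_server)


def hbm_per_ultraserver_gb(per_server: int = CHIPS_PER_ULTRASERVER,
                           hbm_gb: int = HBM_PER_CHIP_GB) -> int:
    """Aggregate HBM across one fully populated UltraServer, in GB."""
    return per_server * hbm_gb


if __name__ == "__main__":
    print(ultraservers_needed(MAX_CHIPS_PER_APPLICATION))  # 6945
    print(hbm_per_ultraserver_gb())                        # 20736
```

In other words, a million-chip deployment corresponds to roughly 6,945 UltraServers, each carrying about 20.7TB of HBM in total.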

The company stated that Trainium 3 can cut the cost of training and running AI models by up to 50% compared with equivalent systems built on graphics processing units (GPUs).

Of course, expecting Amazon's Trainium 3 to compete with Google's TPU, let alone "challenge Nvidia," is unrealistic. Investors who bought into that story may be disappointed: nothing disclosed so far shows the chip has any advantage beyond cost-effectiveness.

Amazon declined to disclose benchmark comparisons between its new AI chip and the latest products from Google and Nvidia, and it did not reveal power consumption figures. So far, we know only that each chip integrates 144GB of high-bandwidth memory (HBM). Google's latest Ironwood TPU has 192GB, while Nvidia's Blackwell GB300 offers up to 288GB.

Ron Diamant, AWS Vice President and Chief Architect in charge of the Trainium project, put it bluntly: "I don't think we will try to replace Nvidia."

Diamant added that the chip's biggest advantage is ultimately its cost-effectiveness, and that his main goal is to give customers more options for different computing workloads.

The biggest weakness of Amazon's AI chips is not the chips themselves but the lack of a sufficiently deep and easy-to-use software library to support them.

Apart from Amazon itself and Anthropic, an AI startup in which the company has invested heavily, it is now almost impossible to find any well-known companies using Trainium chips.

In October, Anthropic announced it would purchase up to 1 million Google TPUs, and in November it announced an investment agreement with Nvidia under which it will buy additional computing power based on Nvidia chips. However, Anthropic also emphasized that Amazon remains its "major training partner and cloud service provider," and it expects to be using more than 1 million Trainium 2 chips by the end of the year.

Bedrock Robotics, which uses artificial intelligence to automate construction equipment, says that while its infrastructure runs on AWS servers, it uses Nvidia chips to build the models that guide its excavators. Chief Technology Officer Kevin Peterson summed it up: "We needed something that was both high-performance and easy to use, and that's Nvidia."

Amazon seems to have recognized this problem as well. In announcing Trainium 3, the company pointedly noted that Trainium 4 is already in development, with its biggest highlight being "the ability to work with Nvidia chips."

The company stated that Trainium 4 will support Nvidia's NVLink Fusion high-speed chip interconnect technology, ultimately enabling cost-effective rack-scale AI infrastructure that combines GPUs and Trainium servers.

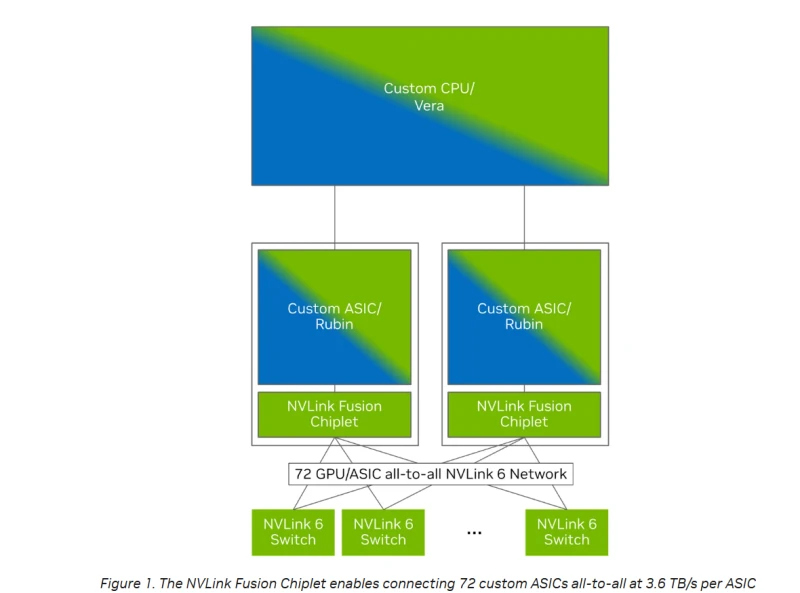

Nvidia explains that the core of NVLink Fusion is the NVLink Fusion chiplet, which hyperscale cloud providers can embed in their custom ASIC designs to connect them to the NVLink scale-up interconnect and NVLink switches.

(The NVLink Fusion chiplet enables all-to-all interconnection of 72 custom ASICs, with 3.6 TB/s of bandwidth per ASIC. Source: NVIDIA)

This means that Trainium 4-based systems will be able to interoperate with Nvidia GPUs and improve overall performance while still using Amazon's own lower-cost server rack technology. It should also make it easier to migrate large-scale AI applications built around Nvidia GPUs to AWS.

(Article source: CLS)