E-commerce giant Amazon is continuing to push into key areas of cloud computing, and its new self-developed AI (artificial intelligence) products have launched as scheduled.

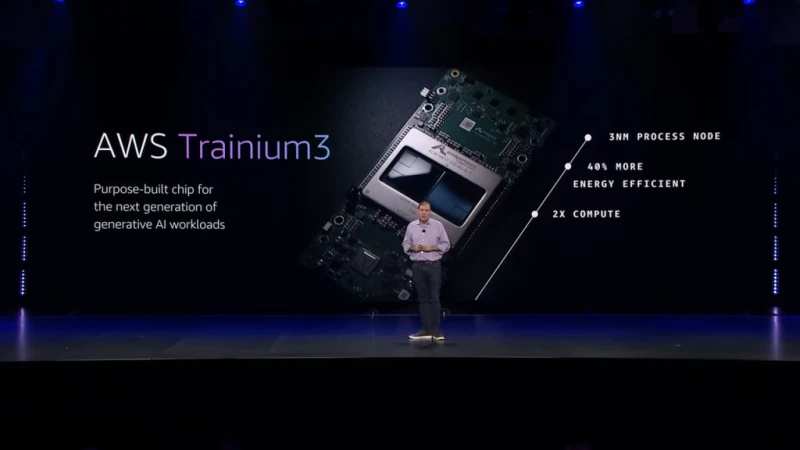

On December 2 local time, Amazon Web Services (AWS) announced a series of new AI products at its 2025 re:Invent global conference, including the official launch of its third-generation custom AI chip, Trainium 3, and three new frontier AI agents. AWS CEO Matt Garman stated, "Trainium is now a multi-billion dollar business and is still growing rapidly."

Trainium is AWS's proprietary chip series designed for AI training and inference tasks, aiming to significantly reduce the overall cost of model training and deployment while maintaining high performance. AWS first announced Trainium 3 at last year's global conference.

According to reports, Trainium 3 is the company's first chip manufactured on a 3-nanometer process node. It delivers four times the performance of its predecessor and reduces the cost of training and running AI models by 40% compared with equivalent GPU systems. Each chip is equipped with 144 GB of HBM3E high-bandwidth memory, provides 4.9 TB/s of memory bandwidth, and achieves slightly over 2.5 PFLOPS of dense FP8 compute.

Building on this, the Amazon EC2 Trn3 UltraServer offers extremely high-density training compute, accommodating up to 144 Trainium 3 chips with a peak AI computing power of 362 PFLOPS (FP8 precision). At FP8, the performance of these systems is roughly comparable to NVIDIA's Blackwell Ultra-based GB300 NVL72 system, but a significant gap remains at FP4.
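As a rough consistency check on the figures above, the sketch below divides the quoted 362 PFLOPS system peak by 144 chips and multiplies the quoted per-chip memory figures by the chip count. The function and field names are hypothetical, and the aggregate memory totals are simple multiplications that assume linear scaling across chips; they are derived here rather than quoted in the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UltraServerTotals:
    fp8_pflops_per_chip: float  # implied by the quoted system peak
    hbm_capacity_tb: float      # derived, assuming linear scaling
    hbm_bandwidth_tbps: float   # derived, assuming linear scaling


def trn3_ultraserver_totals(
    chips: int = 144,                # maximum chips per Trn3 UltraServer (quoted)
    hbm_gb_per_chip: float = 144.0,  # HBM3E capacity per chip (quoted)
    hbm_tbps_per_chip: float = 4.9,  # memory bandwidth per chip (quoted)
    peak_fp8_pflops: float = 362.0,  # system peak at FP8 (quoted)
) -> UltraServerTotals:
    """Derive per-chip and aggregate figures from the quoted numbers."""
    return UltraServerTotals(
        fp8_pflops_per_chip=peak_fp8_pflops / chips,
        hbm_capacity_tb=chips * hbm_gb_per_chip / 1000,  # decimal TB
        hbm_bandwidth_tbps=chips * hbm_tbps_per_chip,
    )


totals = trn3_ultraserver_totals()
print(f"Implied FP8 per chip: {totals.fp8_pflops_per_chip:.2f} PFLOPS")
print(f"Aggregate HBM capacity: {totals.hbm_capacity_tb:.1f} TB")
print(f"Aggregate HBM bandwidth: {totals.hbm_bandwidth_tbps:.1f} TB/s")
```

The implied per-chip figure comes out at roughly 2.51 PFLOPS, which is consistent with the "slightly over 2.5 PFLOPS" quoted for a single chip.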

Trainium 3 is officially available. Source: AWS

Meanwhile, AWS confirmed that it is developing the next-generation Trainium 4 chip. This chip is expected to deliver a 6x improvement in compute performance at FP4 precision, a 4x improvement in memory bandwidth, and a 2x increase in memory capacity. AWS also revealed that, with the help of its partner NVIDIA, Trainium 4 will support NVIDIA's NVLink Fusion high-speed interconnect technology, meaning that Trainium 4 will be able to work seamlessly with GPUs in NVIDIA's MGX racks, giving customers more flexible hybrid architecture options.

Several AWS partners have already adopted Trainium 3. Decart, an AI video generation company, stated that with Trainium 3 its real-time video generation inference ran 4x faster at only half the cost of GPU-accelerated computation. Customers such as Anthropic and Ricoh have also reduced training and inference costs by up to 50% by adopting Trainium chips.

AWS also revealed the latest progress of "Project Rainier," its initiative in collaboration with Anthropic: one year after its launch, the project has connected more than 500,000 Trainium2 chips, making it one of the world's largest AI computing clusters, five times the size of the infrastructure used to train Anthropic's previous generation of models.

In addition, AWS launched three new frontier AI agents at the conference. They are autonomous and scalable, capable of working continuously for hours or even days without ongoing intervention. Among them, the Kiro autonomous agent can complete tasks independently and keeps learning as it works; the AWS Security Agent acts as a security advisor across application design, code review, and penetration testing; and the AWS DevOps Agent helps teams resolve and prevent operational failures.

The continued release of new products in the Trainium series underscores Amazon's ongoing push into the AI chip industry. In November, Google released Ironwood, its seventh-generation self-developed TPU (Tensor Processing Unit) chip. Reports subsequently surfaced that Meta is considering deploying Google's TPUs in its data centers starting in 2027, in a deal worth billions of dollars. The news sent NVIDIA's stock price sharply lower.

However, compared with NVIDIA and Google, Amazon's Trainium chips lack a deep, easy-to-use software library. Aside from Amazon itself and Anthropic, the AI startup in which it has invested heavily, no other well-known company has adopted Trainium chips on a large scale. This may explain why Amazon is highlighting support for NVIDIA's NVLink as a major feature of its next-generation chip.

In an interview with foreign media, Dave Brown, Vice President of Compute and Machine Learning at AWS, said, "Diversity in the AI chip market is a good thing... Our customers want to keep getting more computing power and higher performance and, more importantly, at lower prices." Meanwhile, the AWS Vice President and Chief Architect in charge of the Trainium project stated bluntly, "I don't think we will try to replace NVIDIA."

On December 2, Amazon (Nasdaq: AMZN) rose 0.23% to close at $234.42 per share, giving it a total market capitalization of $2.51 trillion.

(Source: The Paper)